In this post I show how to customize your pipeline and other external files in Azure DevOps using variables from the Library. Often, companies want to improve their internal processes for a real digital transformation. As a developer, I try to introduce Azure and Azure DevOps to dramatically improve the performance of the development department.

In my previous posts, I covered a naming convention you can use for your resources in Azure, how to deploy apps with Docker, and how to release App Services with pipelines.

What I want to achieve

So, I started to investigate how to create a repository template for Shiny applications written in R. The researchers don’t know anything about pipelines or code; they only want a quick way to see their applications on a server. The configuration of the applications (not the usual files from .NET) contains sensitive data, such as the password to access the server, that I don’t want to distribute. The DOCKERFILE has similar issues. Then there is the azure-pipeline.yml, which contains some global configuration to connect to other services on Azure Virtual Machines.

Then, what I want to achieve is to:

- use the variables Library in Azure DevOps across the repositories

- use the variables in the azure-pipeline.yml

- add the values from the Library in the external files

Example of Azure pipeline

First, this is an example of the pipeline I want to use. I want to use all the variables from the Library.

trigger:
- main

resources:
- repo: self

variables:
  # Container registry service connection established during pipeline creation
  dockerRegistryServiceConnection: '2ce0b4b1'
  imageRepository: 'shinyappimage'
  containerRegistry: ''
  dockerfilePath: '$(Build.SourcesDirectory)/DOCKERFILE'
  tag: '$(Build.BuildId)'

  # Agent VM image name
  vmImageName: 'ubuntu-latest'

stages:
- stage: Build
  displayName: Build and push stage
  jobs:
  - job: Build
    displayName: Build
    pool:
      vmImage: $(vmImageName)
    steps:
    - task: Docker@2
      displayName: Build and push an image to container registry
      inputs:
        command: buildAndPush
        repository: $(imageRepository)
        dockerfile: $(dockerfilePath)
        containerRegistry: $(dockerRegistryServiceConnection)
        tags: |
          latest
    - task: SSH@0
      displayName: 'Run shell commands on remote machine'
      inputs:
        sshEndpoint: 'ssh'
        commands: |
          sudo docker pull $(containerRegistry)/$(imageRepository)
        failOnStdErr: false
      continueOnError: true

Example of external configuration file

Second, this is an example of an external file. You can see there are some sensitive values, such as clientId and clientSecret, that I don’t want to share.

# Maintainer #{Maintainer}#
proxy:
  title: Open Analytics Shiny Proxy
  port: 8080
  authentication: openid
  openid:
    auth-url: https://youridentityserver/connect/authorize
    token-url: https://youridentityserver/connect/token
    jwks-url: https://youridentityserver/.well-known/openid-configuration/jwks
    logout-url: https://youridentityserver/Account/Logout?return=https://yoururl/
    client-id: 'clientId'
    client-secret: 'clientSecret'
    scopes: [ "openid", "profile", "roles" ]
    username-attribute: aud
  docker:
    internal-networking: true
    # url setting needed FOR WINDOWS ONLY
    # url: https://host.docker.internal:2375
  specs:
  - id: 01_hello
    display-name: Hello Application
    description: Application which demonstrates the basics of a Shiny app
    container-cmd: ["R", "-e", "shinyproxy::run_01_hello()"]
    container-image: openanalytics/shinyproxy-demo
    container-network: sp-example-net
  - id: 06_tabsets
    container-cmd: ["R", "-e", "shinyproxy::run_06_tabsets()"]
    container-image: openanalytics/shinyproxy-demo
    container-network: sp-example-net

logging:
  file:
    opt/shinyproxy/shinyproxy.log

spring:
  servlet:
    multipart:
      max-file-size: 200MB
      max-request-size: 200MB

It seems easy, but it took me a lot of time to understand how to do it. Let’s go.

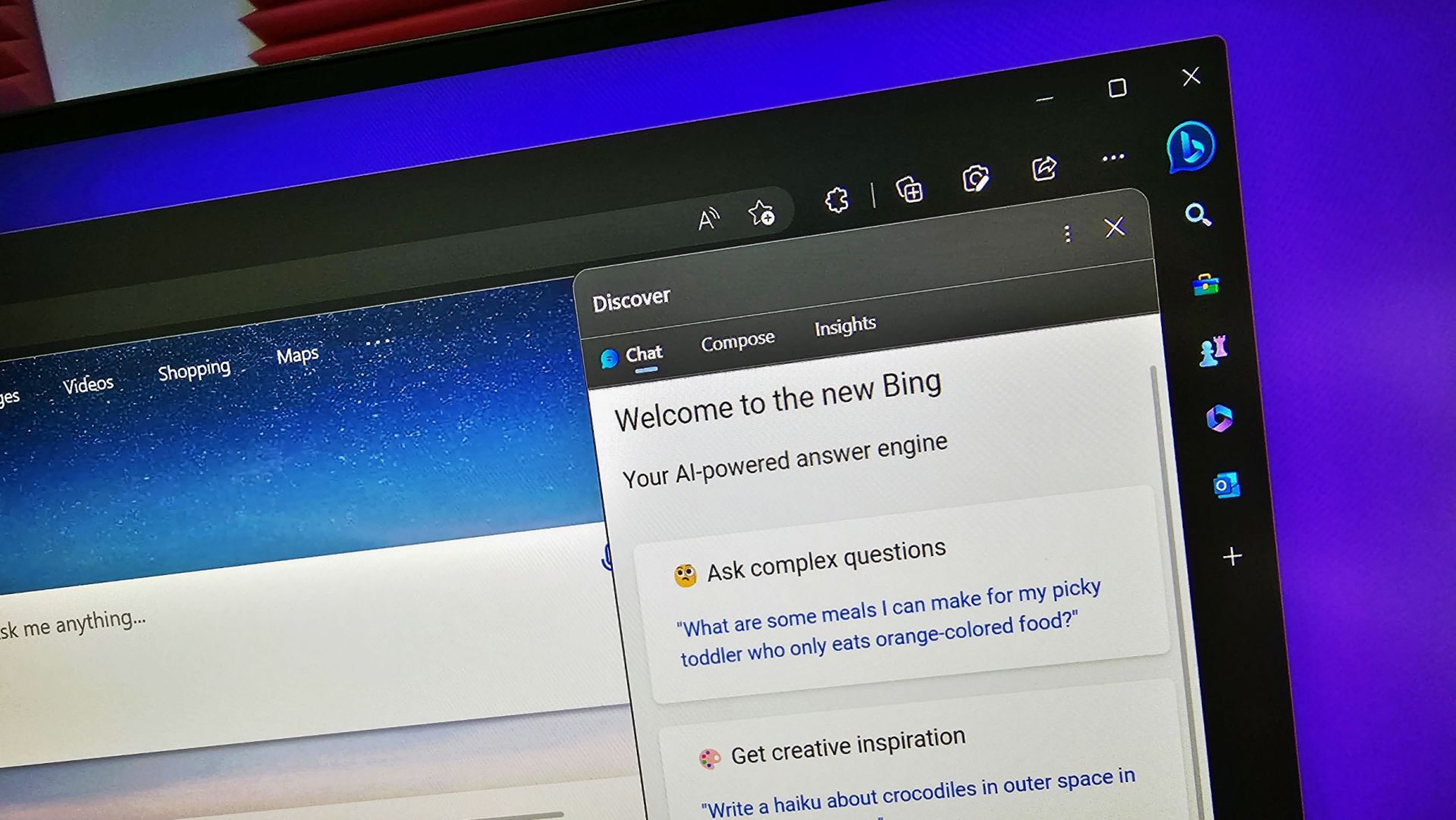

Variables Library in Azure DevOps

In Azure DevOps, there is a Library where you can store variables to use across projects.

To create a new library, just click on + Variable group.

Then, choose the Variable group name, leave Allow access to all pipelines checked and add your variables. Then, press Save.

So, this part is done. One important thing: the pipeline also has its own variables. Those variables are valid only in that pipeline, not in all of them.

Link variable Library with a pipeline

I found this configuration a bit confusing. If you use Variables in the pipeline page, those variables are valid only for this pipeline. To link a Library, you have to edit the pipeline first.

Then, click on the 3 dots on the top right, and then select Triggers, like in the following image.

So, you are redirected to the following page.

Now, click on Variables.

And then, click on Variable groups.

Then, click on the Link variable group button and a properties panel appears on the right. Select the group you want to link from the list and then click on the Link button.
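As an alternative to the UI steps above, a variable group can also be referenced directly from the YAML file. The following is a minimal sketch; the group name ShinyProxySettings is a hypothetical placeholder for the name you chose in the Library. Note that once a group is listed, the other variables have to be written in the name/value list form.

```yaml
variables:
# 'ShinyProxySettings' is a hypothetical variable group name; use the one created in the Library
- group: ShinyProxySettings
# Pipeline-local variables use the name/value form when a group is listed
- name: imageRepository
  value: 'shinyappimage'
- name: vmImageName
  value: 'ubuntu-latest'
```

Depending on how the permissions of the group are configured, the first run may ask you to authorize the pipeline to use it.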

Specify variables in the pipeline

After that, in your azure-pipeline.yml, it is possible to use the variables from the Library. For that, you have to use the following syntax:

$(variable)

There is no distinction between the variables in the pipeline and the variables in the variable groups. You always use them with the above syntax.
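As a quick illustration, here is a hedged sketch of a step that reads one value defined in the pipeline and one coming from a linked variable group. ACRLoginServer is the group variable used later in this post; the step itself is only an example and not part of my pipeline.

```yaml
steps:
- script: |
    echo "Image repository: $(imageRepository)"
    echo "Registry: $(ACRLoginServer)"
  displayName: 'Print variables (example only)'
```

Values marked as secret in the Library are masked in the logs, so printing them this way does not reveal them.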

Add conditions for an empty variable

When you customize your pipeline in Azure DevOps, it is sometimes useful to check whether a variable is empty and, if so, stop the process.

For example, I want to be sure the researcher changed the imageRepository in the azure-pipeline.yml.

What can I do? Under jobs, I added a new job called Check that is responsible for verifying whether the variable is empty. For that, I use the condition keyword. For example:

stages:
- stage: Build
  displayName: Build and push stage
  jobs:
  - job: Check
    condition: eq('${{ variables.imageRepository }}', '')
    steps:
    - script: |
        echo '##[error] The imageRepository must have a value!'
        exit 1

As you can see in the code above, I want to run the steps only if the variable is empty. eq(...) is the function that checks whether two values are equal. To reference a variable, I have to use '${{ variables.imageRepository }}'. To stop the pipeline and raise an error, I use exit 1.
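The same check can also be written as a single step at the top of the Build job instead of a separate job. This is only a sketch of an alternative, not what I use above. It assumes a Linux agent with bash, and the environment variable name IMAGE_REPOSITORY is my own choice. It also assumes imageRepository is declared (possibly as an empty string), as in the pipeline in this post; an undeclared variable would be left as the literal text $(imageRepository) and the check would pass.

```yaml
steps:
- bash: |
    # Fail early when the value is empty
    if [ -z "$IMAGE_REPOSITORY" ]; then
      echo "##vso[task.logissue type=error]The imageRepository must have a value!"
      exit 1
    fi
  displayName: 'Check imageRepository (example only)'
  env:
    # Pass the pipeline variable to the script as an environment variable
    IMAGE_REPOSITORY: $(imageRepository)
```

With this form the job fails on its first step, so the later steps in the same job do not run.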

Full example in azure-pipeline.yml

So, the following code is the implementation of using local variables, variable groups and conditions. For more details about condition, see the Microsoft documentation.

trigger:
- main

resources:
- repo: self

variables:
  # Container registry service connection established during pipeline creation
  dockerRegistryServiceConnection: ''
  # Insert a name for your container
  imageRepository: ''
  containerRegistry: $(ACRLoginServer)
  dockerfilePath: '$(Build.SourcesDirectory)/DOCKERFILE'
  tag: '$(Build.BuildId)'

  # Agent VM image name
  vmImageName: 'ubuntu-latest'

stages:
- stage: Build
  displayName: Build and push stage
  jobs:
  - job: Check
    condition: eq('${{ variables.imageRepository }}', '')
    steps:
    - script: |
        echo '##[error] The imageRepository must have a value!'
        exit 1
  - job: Build
    condition: not(eq('${{ variables.imageRepository }}', ''))
    displayName: Build
    pool:
      vmImage: $(vmImageName)
    steps:
    - task: Docker@2
      displayName: Build and push an image to container registry
      inputs:
        command: buildAndPush
        repository: $(imageRepository)
        dockerfile: $(dockerfilePath)
        containerRegistry: $(dockerRegistryServiceConnection)
        tags: |
          latest
    - task: SSH@0
      displayName: 'Run shell commands on remote machine'
      inputs:
        sshEndpoint: 'ShinyServerDev'
        commands: |
          echo $(SSHPassword) | sudo -S docker pull $(containerRegistry)/$(imageRepository)
        failOnStdErr: false
      continueOnError: true

Use variable in external files

So far, you can customize your pipeline in Azure DevOps, but that is not enough. Another important thing is how to use the variables in static or external files. By external files, I mean files that Azure doesn’t recognize as part of the pipeline itself. For example, you don’t want to distribute sensitive information in the web.config of your ASP.NET application or the appsettings.json of your ASP.NET Core application.

In my case, I don’t want to share the secrets in the application.yml.

So, thanks to the support of the Visual Studio community, I found an extension for DevOps called Replace Tokens in the marketplace.

This extension works for both classic and YAML pipelines. It provides a pipeline task that replaces tokens in files with variable values. In the external file, if you set #{var1}# as a value, the task replaces #{var1}# with the value of the variable var1.

So, I added a new step in my jobs to update the application.yml in the pipeline without sharing any sensitive data with anybody.

steps:
- task: qetza.replacetokens.replacetokens-task.replacetokens@3
  displayName: 'Replace tokens'
  inputs:
    targetFiles: '**/application.yml'
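If you prefer to make the token format explicit, the task accepts inputs for the prefix and suffix. The sketch below shows the settings as I understand version 3 of the task to support them; treat the exact input names as an assumption and check them against the extension documentation for the version you install.

```yaml
steps:
- task: qetza.replacetokens.replacetokens-task.replacetokens@3
  displayName: 'Replace tokens'
  inputs:
    targetFiles: '**/application.yml'
    # Token delimiters, matching the #{name}# format used in application.yml
    tokenPrefix: '#{'
    tokenSuffix: '}#'
    # Assumed option: fail the build when a token has no matching variable
    actionOnMissing: 'fail'
```

Failing on a missing variable is a design choice: it prevents an image from being built with an unreplaced token such as #{IdSrvClientSecret}# still in the configuration.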

Conclusion

Finally, I hope this post about how to customize your pipeline in Azure DevOps can help you. If you need more info, please use the forum.

azure-pipeline.yml

# Docker
# Build and push an image to Azure Container Registry
# https://docs.microsoft.com/azure/devops/pipelines/languages/docker

trigger:
- main

resources:
- repo: self

variables:
  # Container registry service connection established during pipeline creation
  dockerRegistryServiceConnection: '570e2548-a092-4093-9a34-b4ba541e3997'
  imageRepository: 'shinyproxyimage'
  containerRegistry: $(ACRLoginServer)
  dockerfilePath: '$(Build.SourcesDirectory)/DOCKERFILE'
  server: $(ShinyServer)
  tag: '$(Build.BuildId)'

  # Agent VM image name
  vmImageName: 'ubuntu-latest'

stages:
- stage: Build
  displayName: Build and push stage
  jobs:
  - job: Build
    displayName: Build
    pool:
      vmImage: $(vmImageName)
    steps:
    - task: qetza.replacetokens.replacetokens-task.replacetokens@3
      displayName: 'Replace tokens'
      inputs:
        targetFiles: '**/application.yml'
    - task: Docker@2
      displayName: Build and push an image to container registry
      inputs:
        command: buildAndPush
        repository: $(imageRepository)
        dockerfile: $(dockerfilePath)
        containerRegistry: $(dockerRegistryServiceConnection)
        tags: |
          latest
    - task: SSH@0
      displayName: 'SSH: stop shinyproxy'
      inputs:
        sshEndpoint: $(server)
        commands: |
          echo $(SSHPassword) | sudo -S docker stop shinyproxy
        failOnStdErr: false
      continueOnError: true
    - task: SSH@0
      displayName: 'SSH: remove image'
      inputs:
        sshEndpoint: $(server)
        commands: |
          echo $(SSHPassword) | sudo -S docker rm shinyproxy
        failOnStdErr: false
      continueOnError: true
    - task: SSH@0
      displayName: 'SSH: pull new image and run'
      inputs:
        sshEndpoint: $(server)
        commands: |
          echo $(SSHPassword) | sudo -S docker pull $(containerRegistry)/$(imageRepository)
          echo $(SSHPassword) | sudo -S docker run --name shinyproxy -d -v /var/run/docker.sock:/var/run/docker.sock --net sp-example-net -p 8080:8080 $(containerRegistry)/$(imageRepository)
        failOnStdErr: false
      continueOnError: true

External file

# Maintainer #{Maintainer}#
proxy:
  title: Open Analytics Shiny Proxy
  port: 8080
  authentication: openid
  openid:
    auth-url: https://youridentityserver/connect/authorize
    token-url: https://youridentityserver/connect/token
    jwks-url: https://youridentityserver/.well-known/openid-configuration/jwks
    logout-url: https://youridentityserver/Account/Logout?return=https://yoururl/
    client-id: #{IdSrvClientId}#
    client-secret: #{IdSrvClientSecret}#
    scopes: [ "openid", "profile", "roles" ]
    username-attribute: aud
  docker:
    internal-networking: true
    # url setting needed FOR WINDOWS ONLY
    # url: https://host.docker.internal:2375
  specs:
  - id: 01_hello
    display-name: Hello Application
    description: Application which demonstrates the basics of a Shiny app
    container-cmd: ["R", "-e", "shinyproxy::run_01_hello()"]
    container-image: openanalytics/shinyproxy-demo
    container-network: sp-example-net
  - id: 06_tabsets
    container-cmd: ["R", "-e", "shinyproxy::run_06_tabsets()"]
    container-image: openanalytics/shinyproxy-demo
    container-network: sp-example-net
  - id: testapp
    display-name: Test application
    description: Docker test application
    container-cmd: ["R", "-e", "shiny::runApp('/root/testApp')"]
    container-image: testimage
    container-network: sp-example-net

logging:
  file:
    opt/shinyproxy/shinyproxy.log

spring:
  servlet:
    multipart:
      max-file-size: 200MB
      max-request-size: 200MB
